Sunset Quality Predictor

Predicts the quality of the sunset before it happens

A system for predicting the visual quality of the sunset in New York City before it happens. It uses machine learning and image classification to analyze images of the sky over Manhattan taken throughout the day, and produces a prediction from one to five stars.

Collecting images

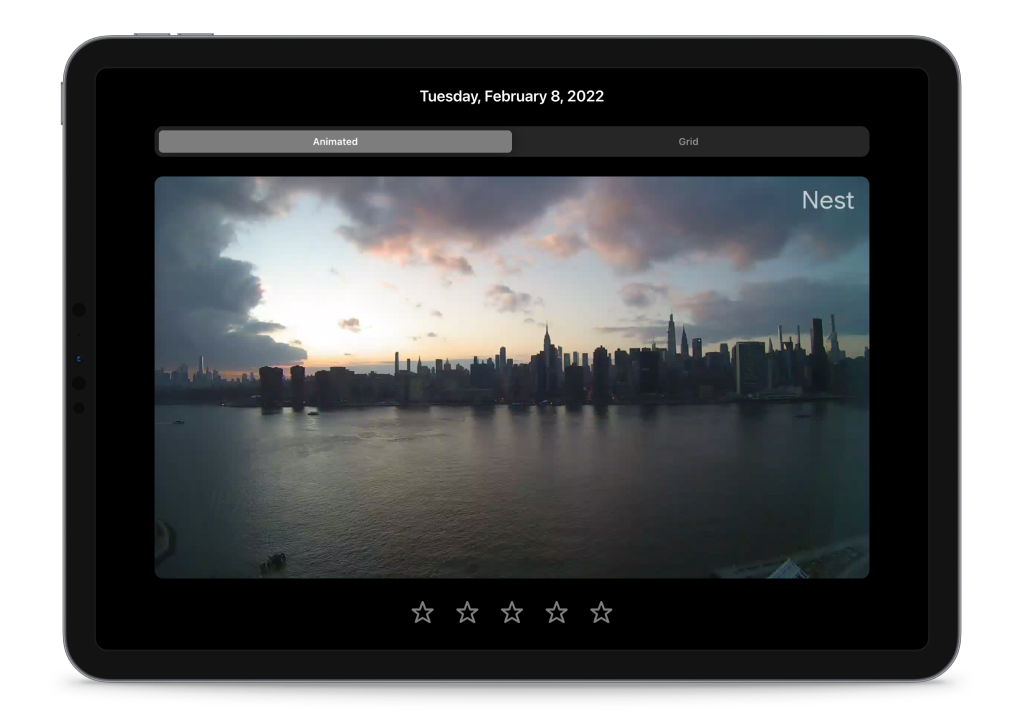

A Nest camera is positioned so that it points west at the Manhattan skyline. A cron job on a Raspberry Pi requests a snapshot from the Nest camera every minute and saves it to an SSD connected to the Raspberry Pi.
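A minimal sketch of the per-minute capture script, assuming the camera exposes a still image at an HTTP URL. The README does not say how the snapshot is requested, so `SNAPSHOT_URL`, `SSD_ROOT`, and the one-folder-per-day naming scheme are all hypothetical.

```python
import datetime as dt
import urllib.request
from pathlib import Path
from typing import Optional

# Hypothetical values: the README does not specify how snapshots are
# requested from the camera or where they are stored on the SSD.
SNAPSHOT_URL = "http://camera.local/snapshot.jpg"
SSD_ROOT = Path("/mnt/ssd/sunset")


def snapshot_path(taken_at: dt.datetime, root: Path = SSD_ROOT) -> Path:
    """One file per minute, grouped into one folder per day (e.g. 2023-06-01/1705.jpg)."""
    return root / taken_at.strftime("%Y-%m-%d") / taken_at.strftime("%H%M.jpg")


def capture(now: Optional[dt.datetime] = None) -> Path:
    """Download a single snapshot and save it under its minute-stamped path."""
    now = now or dt.datetime.now()
    path = snapshot_path(now)
    path.parent.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(SNAPSHOT_URL, timeout=30) as response:
        path.write_bytes(response.read())
    return path
```

Scheduled with a crontab entry such as `* * * * * python3 /home/pi/capture.py` (a hypothetical script that calls `capture()`), this writes one minute-stamped file per run, so later steps can look up the frame for any given minute directly from its timestamp.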

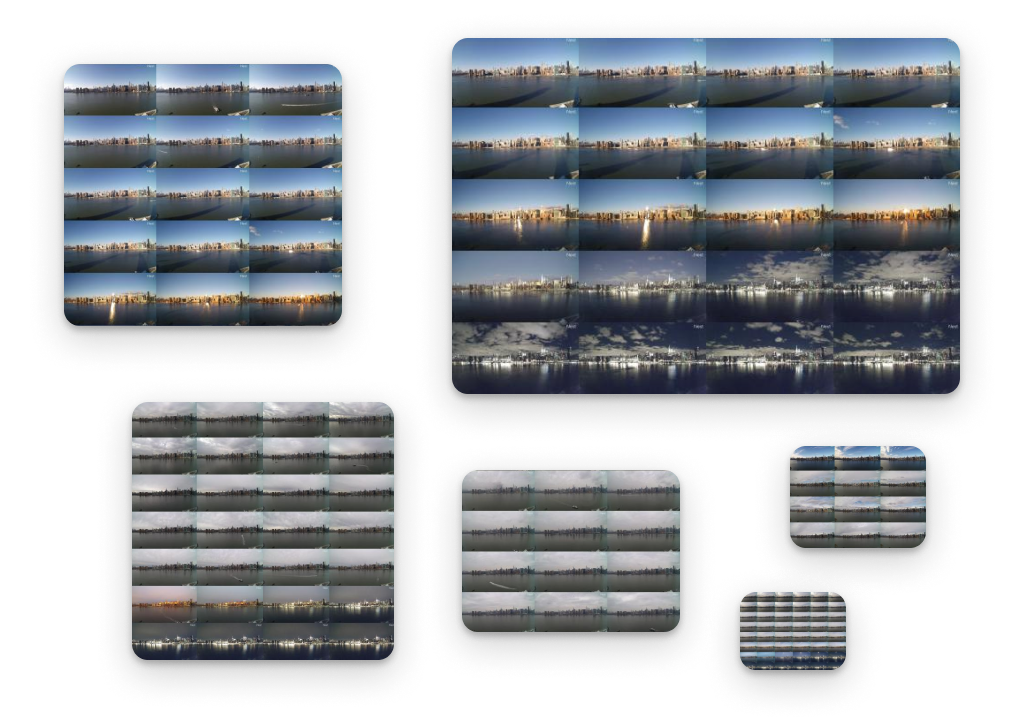

Each day's images are compiled into a composite grid showing what the sky looks like throughout the day, with one snapshot every 15 minutes from four hours before sunset to one hour before sunset.
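Assuming both ends of the window are included, sampling every 15 minutes across those three hours gives 13 snapshots per day. A sketch of choosing those times and assigning each one a grid cell, assuming the day's sunset time is already known (for example from an astronomy library) and using a hypothetical column count:

```python
import datetime as dt

INTERVAL = dt.timedelta(minutes=15)
WINDOW_START = dt.timedelta(hours=4)  # four hours before sunset
WINDOW_END = dt.timedelta(hours=1)    # one hour before sunset


def composite_times(sunset: dt.datetime) -> list[dt.datetime]:
    """Snapshot times every 15 minutes, from 4 h to 1 h before sunset (both ends included)."""
    times = []
    t = sunset - WINDOW_START
    while t <= sunset - WINDOW_END:
        times.append(t)
        t += INTERVAL
    return times


def grid_positions(count: int, columns: int) -> list[tuple[int, int]]:
    """(row, column) cell for each snapshot, filled left to right, then top to bottom."""
    return [divmod(i, columns) for i in range(count)]
```

With a 20:00 sunset, `composite_times` returns 16:00, 16:15, ..., 19:00, and a four-column layout (an illustrative choice) places those 13 frames in four rows.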

Building training data

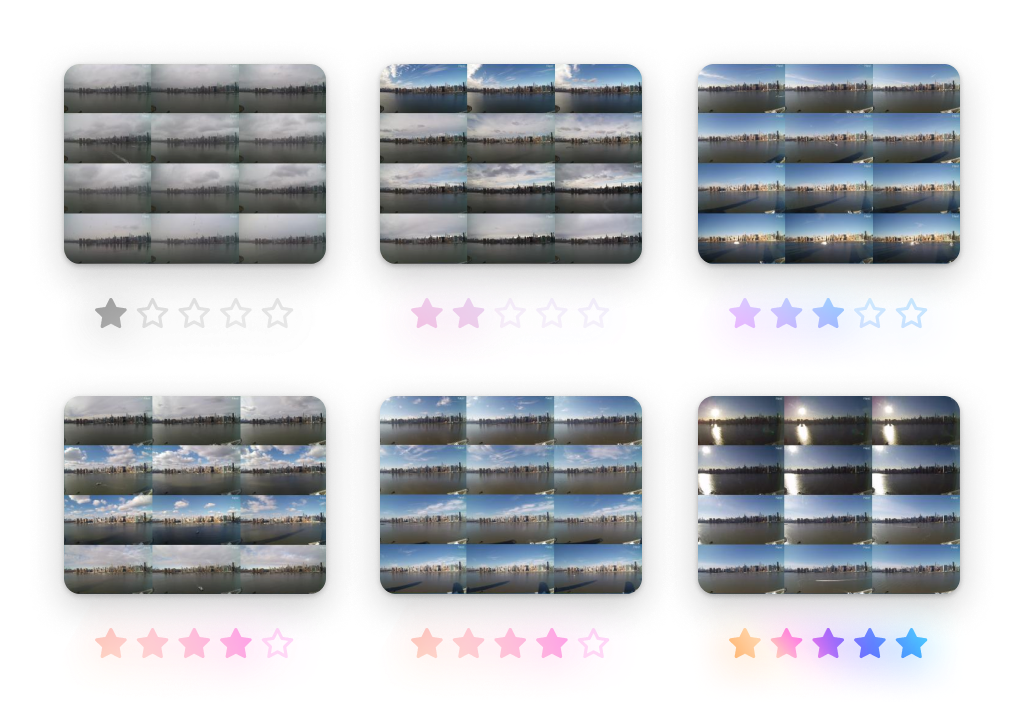

Friends and family were invited to rate sunsets from one to five stars, representing their opinion of the visual quality and beauty of each sunset. They can view each sunset either as an animated loop or as a grid of snapshots from before and after sunset. The ratings are saved to an Airtable database for further processing.

Training the model

The crowdsourced sunset ratings for each day are averaged and matched to that day's composite image. These rated composite images are then used to train an image classification model, using TensorFlow.js and transfer learning to retrain a MobileNet base model.
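The averaging and matching step could look like the sketch below. Because the model is a classifier, it assumes each day's average is rounded to the nearest whole star (halves round up) to serve as its class label; the rounding rule, the `(date, stars)` input shape, and the `composites` mapping from date to image path are illustrative assumptions, not details from the project.

```python
from collections import defaultdict
from statistics import mean


def average_ratings(ratings: list[tuple[str, int]]) -> dict[str, float]:
    """Average every rating submitted for a given day (keyed by ISO date)."""
    by_day: dict[str, list[int]] = defaultdict(list)
    for day, stars in ratings:
        if not 1 <= stars <= 5:
            raise ValueError(f"rating out of range: {stars}")
        by_day[day].append(stars)
    return {day: mean(values) for day, values in by_day.items()}


def training_pairs(averages: dict[str, float], composites: dict[str, str]) -> list[tuple[str, int]]:
    """Pair each rated day that has a composite image with a 1-5 class label."""
    pairs = []
    for day, avg in sorted(averages.items()):
        if day in composites:
            # Assumed labeling rule: round the average half up to a whole star.
            label = int(avg + 0.5)
            pairs.append((composites[day], label))
    return pairs
```

Days with ratings but no composite image (or the reverse) are simply skipped, so a missed camera capture does not produce a mislabeled training example.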

Making predictions

Every day, one hour before sunset, a server runs the model on that day's composite image of the sky. It makes a prediction, generates an image showing the date, star rating, and confidence level, posts it to the @nycsunsetbot Instagram page, and updates a website.
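A sketch of turning the classifier's output into the star rating and confidence shown in the daily post. It assumes the model yields one raw score per star class (1 to 5) and applies a softmax to get probabilities; if the model already outputs probabilities, that step is unnecessary. `predict_stars` and `caption` are hypothetical helpers, and the caption format is illustrative.

```python
import math


def predict_stars(logits: list[float]) -> tuple[int, float]:
    """Convert five class scores (assumed order: 1 to 5 stars) into (stars, confidence)."""
    if len(logits) != 5:
        raise ValueError("expected one score per star rating")
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(5), key=probs.__getitem__)  # ties resolve to the lower rating
    return best + 1, probs[best]


def caption(date: str, stars: int, confidence: float) -> str:
    """Text for the daily post: date, star rating, and confidence as a percentage."""
    return f"{date}: {'★' * stars}{'☆' * (5 - stars)} ({confidence:.0%} confidence)"
```

For example, `caption("2023-06-01", 4, 0.759)` produces `2023-06-01: ★★★★☆ (76% confidence)`.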